Job Monitoring in Slurm

Monitoring your job is an important part of making sure you request the right resources. It can be very hard to know how much to request when you first run a program, but if you monitor it, you can make more informed decisions in the future. Our acceptable use guidelines require that you do not request more resources than your application can use; doing so wastes resources and prevents others from using them.

There are a few options to monitor jobs in Slurm.

- jobperf: A tool custom-written for Palmetto that provides easy job statistics and live CPU, memory, and GPU monitoring.

- squeue: List currently queued and running jobs.

- seff: Return summary job performance metrics.

- sacct: List completed jobs and investigate resources used by individual Slurm steps.

- Connect to nodes: You can connect to nodes via SSH and run tools like htop and nvidia-smi.

Using jobperf with Slurm

The jobperf command provides job statistics and live monitoring tools for both Slurm and PBS. With it, you can see live performance metrics for each node and GPU while a job is running.

jobperf Command Line Usage

The basic usage is to pass in the job ID (either PBS or Slurm):

jobperf 1214642

This will print out summary information about the job as well as summary CPU and memory resource usage. If the job is running, it will also fetch current memory, CPU, and GPU usage for each node.

For example, here is the output for a job that has completed:

Job Summary
-----------
Job Name: cluster-openfoam-4x16-fdr
Nodes: 4
Total CPU Cores: 64
Total Mem: 248gb
Total GPUs: 0
Walltime Requested: 12:00:00
Status: Finished

Overall Job Resource Usage
--------------------------
                                   Percent of Request
Walltime Used       12:00:24          100.06 %
Avg CPU Cores Used  61.42 cores        95.96 %
Memory Used         27084840kb         10.42 %

At a glance, I can tell a few things about this job:

- 100% of walltime was used. This job didn't actually finish; it was likely killed by the scheduler when it reached its walltime limit. It is good not to request too much walltime, but this job appears to have needed more time. If I run it again, I should increase the walltime.

- I used 96% of the requested CPU cores. This is excellent utilization, so I won't have to change ncpus at all.

- I used only 10% of my memory request (~27GB of 248GB). It is important to give yourself a safety buffer when requesting memory (Slurm will kill jobs that exceed their requested memory), but using only 10% of what was requested is wasteful. When running this job again, I should decrease the memory request. With a safety margin of 50%, my request should be about 27 x 1.5 = ~40GB total, or 10GB per node. Reducing this request would free up over 200GB for other users of the cluster!
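The memory arithmetic in the last bullet can be written as a small Python helper. This is a sketch for illustration only. `recommend_mem_gb` is a hypothetical name and not a jobperf feature. It assumes jobperf's `kb` figure converts to GB at 1,000,000 kb per GB, which is how ~27GB was derived above.

```python
def recommend_mem_gb(used_kb, safety_margin=0.5, nodes=1):
    """Hypothetical helper: suggest a memory request from observed peak usage.

    used_kb       -- peak memory reported by jobperf (e.g. "27084840kb")
    safety_margin -- extra headroom as a fraction (0.5 means +50%)
    nodes         -- number of nodes the job spans
    Returns (total_gb, per_node_gb), assuming 1 GB = 1,000,000 kb.
    """
    used_gb = used_kb / 1_000_000
    total_gb = used_gb * (1 + safety_margin)
    return total_gb, total_gb / nodes


total_gb, per_node_gb = recommend_mem_gb(27084840, safety_margin=0.5, nodes=4)
print(f"total: {total_gb:.1f} GB, per node: {per_node_gb:.1f} GB")
# prints "total: 40.6 GB, per node: 10.2 GB"
```

Slurm's `--mem` option is a per-node request, so the per-node figure is the one to compare against `#SBATCH --mem`. Rounding it up (here to 11GB rather than 10GB) keeps the full 50% margin.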

When jobs are still running, the current CPU, memory, and GPU usage is displayed for each node. For example, here are the results for a running VASP job:

Job Summary
-----------
Job Name: vasp_gpu
Nodes: 2
Total CPU Cores: 4
Total Mem: 40gb
Total GPUs: 2
Walltime Requested: 01:30:00
Status: Running

Overall Job Resource Usage
--------------------------
                                   Percent of Request
Walltime Used       00:02:31            2.80 %
Avg CPU Cores Used  1.03 cores         25.66 %
Memory Used         12589808kb         30.02 %

Average Per Node Stats Over Whole Job
--------------------------
                CPU Cores                      Memory
Node       Requested  Used             Requested  Used
node0387   2          1.06 (53.12 %)   20.00 GB   6.01 GB (30.06 %)
node0284   2          1.06 (53.21 %)   20.00 GB   6.09 GB (30.47 %)

Fetching current usage...

Current Per Node Stats
--------------------------
                CPU Cores                      Memory
Node       Requested  Used             Requested  Used
node0387   2          1.01 (50.68 %)   20.00 GB   6.01 GB (30.06 %)
node0284   2          1.01 (50.69 %)   20.00 GB   6.09 GB (30.47 %)

Node       GPU Model              Compute Usage   Memory Usage
node0387   NVIDIA A100 80GB PCIe  98.0 %          7404 MB
node0284   NVIDIA A100 80GB PCIe  98.0 %          8296 MB

From this we can tell a few things about the job:

- It is using one core on each node (2 cores were requested per node, so one core on each node is idle).

- It is making very good use of the GPUs; each requested GPU is near 100% utilization.

- It is using about 30% of the memory requested. This may fluctuate over the course of the job, so it may be better to wait until the job is complete before deciding to reduce the memory request.

You can also pass the -w option (jobperf -w <job id>). This prints the same information as running without -w, but keeps requesting and printing current per-node CPU, memory, and GPU statistics.

jobperf HTTP Usage

If you'd rather look at the job stats in a web interface, you can do so with the -http option. This is particularly useful with the -w (watch) option on jobs that are still running:

jobperf -http -w 2977

It should print out a message like:

Started server on port 46543. View in Open OnDemand:

https://openod.palmetto.clemson.edu/rnode/node0401.palmetto.clemson.edu/46543/

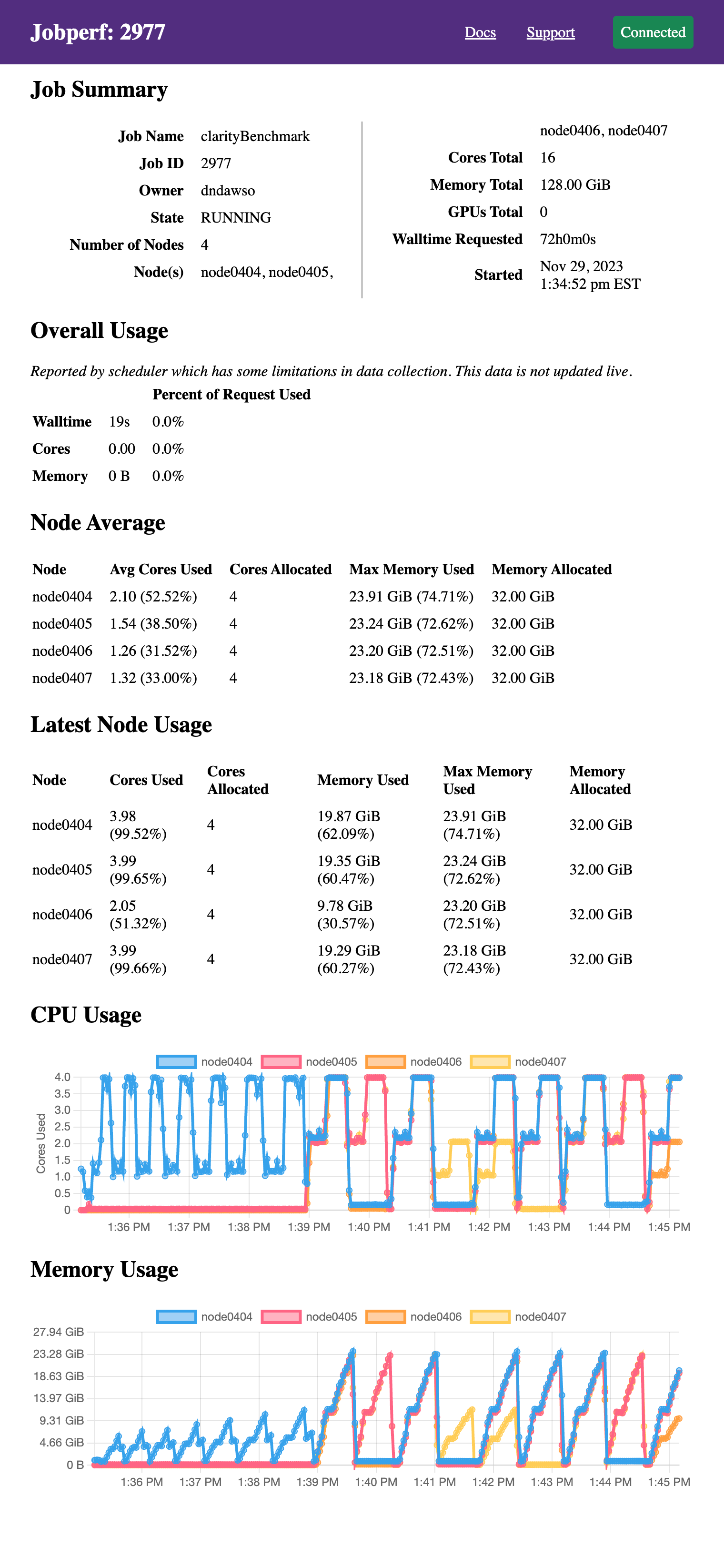

If you visit this link in your browser, you should see a screen like the following (after logging in):

It displays the same information that would be printed to the terminal without the -http option, just in web form. Since we passed the -w option, it also continues to poll resource usage and plots it dynamically. The screenshot above was taken after several minutes (initially, the graphs are empty). This particular job did not request GPUs, but GPU usage is also displayed when GPUs are requested in the job allocation.

These plots are particularly useful for spotting patterns in resource use. This particular program goes through two overall phases:

- meshing: uses up to 4 cores and ~10GB of RAM on one node.

- solving: there are now periods where it uses all the cores on all 4 nodes and up to 23-24GB per node.

Ideally, if your job has phases with different resource requirements, you'd split them across different nodes, but alas, this is commercial software and there is no easy way to split these phases.

Embedding jobperf in a Batch Script

Jobperf can also record resource utilization and recall it later, which is useful for monitoring batch jobs. To do this, add a line like this to your batch job script:

jobperf -record -w -rate 10s -http &

This will:

- [-record] Record the data into a database (the default DB location is ~/.local/share/jobstats.db).

- [-w -rate 10s] Poll usage every 10 seconds (rather than collecting it just once). You can change 10s to whatever interval you like.

- [-http] Start a web server so you can see stats while the job is running.

The & runs jobperf in the background (otherwise it would block, and the rest of your script would never run). You do not need to pass a job ID, since jobperf is running within the job.

For example, you might have a batch script like this:

#!/bin/bash
#SBATCH --job-name jobperftest
#SBATCH --ntasks 1
#SBATCH --cpus-per-task 2
#SBATCH --mem 2gb
#SBATCH --time 0:10:00

jobperf -record -w -http -rate 10s &

module load anaconda3/2022.10
python do_work.py

Once the job starts, you can look at its output file (by default, slurm-<job-id>.out in the directory you submitted from) to find the jobperf URL for live statistics, as described in jobperf HTTP Usage above.

When the job is complete, you can see the recorded statistics by running the following from a login node:

jobperf -load -http <job-id>

This will display not only the summary statistics computed by the job scheduler, but also graphs of usage for each node and GPU over time.

All jobperf Options

Use the -help option for the most up-to-date list of supported flags. These are the current flags:

- -w: Watch mode. Jobperf will not return immediately; instead, it keeps polling at the rate set by the -rate flag. This only works for currently running jobs.

- -rate <time>: Sets the polling period in watch mode, e.g. 1s, 500ms, 10s.

- -record: Save samples (recorded in watch mode, -w) to the database indicated by -record-db.

- -record-db <filename>: File to use as the SQLite DB. Defaults to ~/.local/share/jobstats.db.

- -engine <enginename>: Force the use of a particular job engine (either pbs or slurm). By default, jobperf will try to autodetect the scheduler.

- -http: Start an HTTP server. See jobperf HTTP Usage.

- -http-port: Start the HTTP server on a particular port. The default is to choose an arbitrary free port.

- -http-disable-auth: Disable authentication on the HTTP server. The default allows only the job owner to connect.

- -debug: Print debug logging. If you would like to report an error with jobperf, we'd love the output of running with -debug.

Using seff to check resource usage

Slurm comes with a simple job efficiency script, seff. With it, we can estimate how many resources a job used.

To run seff, just pass the job ID:

seff <job-id>

It will print out summary statistics and efficiency information about the job:

[dndawso@slogin001 ~]$ seff 2917
Job ID: 2917
Cluster: palmetto2
User/Group: dndawso/cuuser
State: COMPLETED (exit code 0)
Nodes: 4
Cores per node: 4
CPU Utilized: 01:06:03
CPU Efficiency: 38.76% of 02:50:24 core-walltime
Job Wall-clock time: 00:10:39
Memory Utilized: 21.93 GB
Memory Efficiency: 17.14% of 128.00 GB

GPU usage is not tracked by the scheduler and is therefore not available in seff.
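The CPU efficiency line can be checked by hand. The core-walltime is wall-clock time × nodes × cores per node (00:10:39 × 4 × 4 = 10224 seconds = 02:50:24), and efficiency is CPU Utilized divided by that. The Python sketch below reproduces the numbers above. The helper names are hypothetical and not part of seff, and the formula is inferred from the output rather than taken from seff's source.

```python
def slurm_time_to_seconds(text):
    """Convert a Slurm-style duration ([D-]HH:MM:SS, MM:SS or MM:SS.mmm) to seconds."""
    days = 0
    if "-" in text:
        day_part, text = text.split("-", 1)
        days = int(day_part)
    seconds = 0.0
    for part in text.split(":"):
        seconds = seconds * 60 + float(part)
    return days * 86400 + seconds


def cpu_efficiency(cpu_utilized, wall_clock, nodes, cores_per_node):
    """Return (efficiency %, core-walltime in seconds) for the allocated cores."""
    core_walltime = slurm_time_to_seconds(wall_clock) * nodes * cores_per_node
    return 100 * slurm_time_to_seconds(cpu_utilized) / core_walltime, core_walltime


eff, core_s = cpu_efficiency("01:06:03", "00:10:39", nodes=4, cores_per_node=4)
print(f"{eff:.2f}% of {core_s:.0f} core-seconds")  # prints "38.76% of 10224 core-seconds"
```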

You can run seff while the job is running, but the numbers likely won't be accurate until the job is complete, because Slurm does not update accounting statistics very often.

Why does seff differ from jobperf in overall statistics?

The CPU usage should be the same in each. If there are differences, please let us know. Memory differences are expected, however. The Slurm database does not store the total maximum memory used by a job. Instead, it stores the max memory used by any task for each step (the TRESUsageInMax column in sacct).

The seff algorithm is as follows: for each step, it calculates approximate max memory usage by multiplying the memory from TRESUsageInMax by the number of tasks. It then reports the highest memory calculated for any step.

This works well in most cases.

- For single-step, single-task jobs, it is accurate.

- For single-step, multiple-task jobs, it may overestimate, but should work well if most of the step's tasks are doing the same thing.

- For jobs with multiple non-overlapping, single-task steps, it is accurate.

- For jobs with multiple non-overlapping, multiple-task steps, it may overestimate, but should work well if most of each step's tasks are doing the same thing.

- For jobs with overlapping steps, it may be quite inaccurate.
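The seff memory estimate described above can be sketched in a few lines of Python. This is an illustration of the described algorithm, not seff's actual code. `Step` and `seff_style_max_mem_kib` are hypothetical names, and the KiB values are copied from job 2917 in the sacct section below.

```python
from dataclasses import dataclass


@dataclass
class Step:
    step_id: str
    ntasks: int
    max_task_mem_kib: float  # mem= value from TRESUsageInMax (largest single task)


def seff_style_max_mem_kib(steps):
    """Per step: largest task's memory x number of tasks; report the biggest step."""
    return max((s.max_task_mem_kib * s.ntasks for s in steps), default=0)


# A few steps from job 2917 (values in KiB).
steps = [
    Step("2917.batch", 1, 338256),
    Step("2917.extern", 4, 0),
    Step("2917.4", 1, 22998628),
    Step("2917.5", 1, 21843172),
    Step("2917.6", 1, 22477272),
    Step("2917.7", 1, 21848644),
]
print(f"{seff_style_max_mem_kib(steps) / 1024**2:.2f} GiB")  # prints "21.93 GiB"
```

This agrees with the 21.93 GB that seff reported for job 2917. Steps 2917.4-2917.7 actually ran at the same time, though, so this is the overlapping-steps case where the estimate falls short.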

Jobperf uses a slightly different algorithm:

- Collect all start and end times for each step.

- For each collected time, t:

  - Find all steps that were running (step start time <= t && end time > t).

  - For each running step, compute approximate max memory use as TRESUsageInMax times the number of tasks.

  - Record this time's memory as the sum of the running steps' max memory used.

- Report the highest memory usage of all times.

It should provide the same numbers as seff for single-step jobs, but will report higher numbers for jobs whose steps overlap.
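The jobperf algorithm above can be sketched in Python as follows. This is a reading of the listed steps, not jobperf's source. `Step` and `jobperf_style_max_mem_kib` are hypothetical names. The start/end times are made up for illustration (in practice they would come from the step start and end times Slurm records). The memory values are from job 2917.

```python
from dataclasses import dataclass


@dataclass
class Step:
    step_id: str
    start: float  # step start time (illustrative units: seconds since the job began)
    end: float    # step end time
    ntasks: int
    max_task_mem_kib: float  # mem= value from TRESUsageInMax (largest single task)


def jobperf_style_max_mem_kib(steps):
    """Peak of the summed per-step estimates over every step start/end time."""
    times = sorted({t for s in steps for t in (s.start, s.end)})
    peak = 0
    for t in times:
        # A step is running at t if it started at or before t and ends after t.
        running = [s for s in steps if s.start <= t < s.end]
        peak = max(peak, sum(s.max_task_mem_kib * s.ntasks for s in running))
    return peak


# Steps 2917.4-2917.7 ran at the same time; the 0-600 s window is made up.
concurrent = [
    Step("2917.4", 0, 600, 1, 22998628),
    Step("2917.5", 0, 600, 1, 21843172),
    Step("2917.6", 0, 600, 1, 22477272),
    Step("2917.7", 0, 600, 1, 21848644),
]
# The same steps run back to back instead (also made-up times).
sequential = [
    Step(s.step_id, i * 600, (i + 1) * 600, s.ntasks, s.max_task_mem_kib)
    for i, s in enumerate(concurrent)
]
print(jobperf_style_max_mem_kib(concurrent))  # prints 89167716 (~85.04 GiB)
print(jobperf_style_max_mem_kib(sequential))  # prints 22998628 (~21.93 GiB)
```

For sequential steps, this reduces to seff's "largest step" answer. For overlapping steps, it adds the steps together.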

Using sacct to check resource usage

The sacct utility displays job data from the Slurm accounting database. Once a job starts running, entries are added to the accounting database for each step (each invocation of srun within a job). Slurm may also record steps of its own, such as the batch and extern steps in the example below.

By default, running sacct with no arguments will show you all of your jobs and steps from today. You can look further back by passing the --starttime flag. For example, to see jobs run in the past 5 days, run:

sacct --starttime now-5days

You can also pass the --job flag to look at just the steps related to one job. For example, if you want to see information on steps from job ID 3016, run:

sacct --job 3016

By default, sacct does not show information related to resource utilization, so we'll need to adjust the columns. These are the most useful:

- TotalCPU: total CPU time used by the step.

- NTasks: total number of tasks in the step.

- TRESUsageInMax: maximum resource usage by any single task in the step.

These fields can be passed as arguments to the --format parameter of sacct. A %N suffix sets a column's width, so %80 keeps TRESUsageInMax from being truncated. For example, to check the usage of job ID 2917, I can use:

sacct --job 2917 --format JobID,JobName,NTasks,TotalCPU,TRESUsageInMax%80

That produces the following output:

JobID           JobName   NTasks   TotalCPU TRESUsageInMax
------------ ---------- -------- ---------- --------------------------------------------------------------------------------
2917         clarityBe+            01:06:02
2917.batch        batch        1  01:18.736 cpu=00:01:19,energy=0,fs/disk=500405988,mem=338256K,pages=1940,vmem=694264K
2917.extern      extern        4  00:00.002 cpu=00:00:00,energy=0,fs/disk=2332,mem=0,pages=0,vmem=0
2917.0          jobperf        1  00:00.045 cpu=00:00:00,energy=0,fs/disk=59720,mem=4676K,pages=58,vmem=12176K
2917.1          jobperf        1  00:00.046 cpu=00:00:00,energy=0,fs/disk=60320,mem=4680K,pages=0,vmem=4680K
2917.2          jobperf        1  00:00.044 cpu=00:00:00,energy=0,fs/disk=59263,mem=4676K,pages=58,vmem=12176K
2917.3          jobperf        1  00:00.044 cpu=00:00:00,energy=0,fs/disk=59252,mem=4676K,pages=58,vmem=12172K
2917.4       svclaunch+        1  20:52.665 cpu=00:20:53,energy=0,fs/disk=729913299,mem=22998628K,pages=524,vmem=23075964K
2917.5       svclaunch+        1  16:21.333 cpu=00:16:20,energy=0,fs/disk=492817603,mem=21843172K,pages=490,vmem=21912144K
2917.6       svclaunch+        1  13:14.594 cpu=00:13:13,energy=0,fs/disk=361008588,mem=22477272K,pages=566,vmem=22545836K
2917.7       svclaunch+        1  14:15.009 cpu=00:14:14,energy=0,fs/disk=378631749,mem=21848644K,pages=543,vmem=21925064K

With this, I can see that step 2917.4 used 20 minutes and 52.665 seconds of CPU time. Its largest task maxed out at 22998628 KiB (the mem value within TRESUsageInMax) = 21.93 GiB. This is the only task within the step (NTasks=1), so the max memory used by the step is 21.93 GiB. For this particular job, steps 2917.4-2917.7 were all run at the same time, so to get the total memory used for the whole job, I add their memory usages together to get about 85 GiB. Often, steps are not run at the same time; they are run sequentially, which changes the math (you'd take the max memory used rather than the sum).
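The sum-versus-max arithmetic for job 2917 can be checked with a short Python sketch. `mem_kib` is a hypothetical helper, not part of Slurm. It pulls the mem= field out of a TRESUsageInMax string. It assumes the K/M/G/T suffixes are powers of 1024 and that a bare number is bytes. The four strings are copied from the sacct output above.

```python
import re

# Multipliers to KiB; treating a bare number as bytes is an assumption.
TO_KIB = {"": 1 / 1024, "K": 1, "M": 1024, "G": 1024**2, "T": 1024**3}


def mem_kib(tres):
    """Return the mem= value of a TRESUsageInMax string in KiB (0.0 if absent)."""
    # (?:^|,) anchors on a field boundary so that vmem= is not matched.
    match = re.search(r"(?:^|,)mem=(\d+(?:\.\d+)?)([KMGT]?)(?:,|$)", tres)
    if match is None:
        return 0.0
    return float(match.group(1)) * TO_KIB[match.group(2)]


steps = {
    "2917.4": "cpu=00:20:53,energy=0,fs/disk=729913299,mem=22998628K,pages=524,vmem=23075964K",
    "2917.5": "cpu=00:16:20,energy=0,fs/disk=492817603,mem=21843172K,pages=490,vmem=21912144K",
    "2917.6": "cpu=00:13:13,energy=0,fs/disk=361008588,mem=22477272K,pages=566,vmem=22545836K",
    "2917.7": "cpu=00:14:14,energy=0,fs/disk=378631749,mem=21848644K,pages=543,vmem=21925064K",
}
# Each of these steps has NTasks=1, so the task maximum is also the step maximum.
per_step_kib = [mem_kib(tres) for tres in steps.values()]
print(f"steps run together: {sum(per_step_kib) / 1024**2:.2f} GiB")
# prints "steps run together: 85.04 GiB"
print(f"steps run one after another: {max(per_step_kib) / 1024**2:.2f} GiB")
# prints "steps run one after another: 21.93 GiB"
```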

Steps may have multiple tasks (e.g. an MPI step). For example, this step had 20 tasks:

JobID           JobName   NTasks   TotalCPU TRESUsageInMax
------------ ---------- -------- ---------- --------------------------------------------------------------------------------
2428.0             pw.x       20   02:18:36 cpu=00:06:57,energy=0,fs/disk=4018983,mem=337392K,pages=241,vmem=3079476K

The usage listed in TRESUsageInMax is the max over all tasks, so to estimate total usage, we can multiply 337392 KiB by 20 to get about 6.4 GiB total.
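The per-step estimate (largest task's memory times NTasks) can be automated over sacct output. The Python sketch below is an illustration, not an official tool. The helper names are hypothetical. It relies on sacct's `--parsable2` and `--noheader` options for pipe-delimited output without a header, which also means no `%80` width suffix should be needed. It treats a mem value without a K/M/G/T suffix as bytes, which is an assumption. Only `run_sacct` calls Slurm; the rest works on plain text.

```python
import subprocess

FIELDS = ["JobID", "NTasks", "TRESUsageInMax"]
TO_KIB = {"K": 1, "M": 1024, "G": 1024**2, "T": 1024**3}


def mem_kib(tres):
    """Return the mem= value of a TRESUsageInMax string in KiB (0.0 if absent)."""
    for item in tres.split(","):
        key, _, value = item.partition("=")
        if key == "mem" and value:
            if value[-1] in TO_KIB:
                return float(value[:-1]) * TO_KIB[value[-1]]
            return float(value) / 1024  # assumption: a bare number is bytes
    return 0.0


def step_estimates_gib(sacct_text):
    """Map step ID -> estimated step memory (max task mem x NTasks) in GiB.

    Expects `sacct --parsable2 --noheader` output with the FIELDS columns.
    """
    estimates = {}
    for line in sacct_text.splitlines():
        if not line.strip():
            continue
        job_id, ntasks, tres = line.split("|")
        if ntasks:  # the job-level row has no NTasks value
            estimates[job_id] = mem_kib(tres) * int(ntasks) / 1024**2
    return estimates


def run_sacct(job_id):
    """Fetch the FIELDS columns for one job; requires sacct on PATH."""
    cmd = ["sacct", "--job", str(job_id), "--format", ",".join(FIELDS),
           "--parsable2", "--noheader"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


sample = "2428.0|20|cpu=00:06:57,energy=0,fs/disk=4018983,mem=337392K,pages=241,vmem=3079476K\n"
print(f"{step_estimates_gib(sample)['2428.0']:.1f} GiB")  # prints "6.4 GiB"
```

On a login node, `step_estimates_gib(run_sacct(2428))` would apply the same calculation to every step of a real job. As noted in the seff discussion, this estimate may overestimate when a step's tasks differ.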

Connect to Slurm compute nodes

For custom monitoring, you can connect directly to a Slurm compute node where your task is running. First, run squeue to find which node(s) your job is running on. For example:

[dndawso@slogin001 ~]$ squeue -u dndawso
JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
 2962     work1 interact  dndawso  R 1-13:26:26      1 node0401

My job is running on node0401. We can then connect to this node with ssh <node name>:

[dndawso@slogin001 ~]$ ssh node0401
Last login: Wed Nov 29 08:25:47 2023 from 10.125.60.31
[dndawso@node0401 ~]$

Once on a compute node, you can inspect your running job in a variety of ways. Useful commands are:

- htop -u <username>: Lists all running processes owned by you.

- nvidia-smi: Shows GPU usage for any GPUs allocated to the job.

When you ssh to a node, you will automatically be attached to a job owned by you. If you have multiple jobs running on the node, you will be attached to the most recently started job. If you need to access a different job, you can use an overlapping, interactive srun instead of ssh. From the login node, you can run:

srun --jobid <job-id> --overlap --nodelist <node-name> --pty bash -l